PyTorch is an open source machine learning framework used for both research prototyping and production deployment. According to its source code repository, PyTorch provides two high-level features:

- Tensor computation (like NumPy) with strong GPU acceleration.

- Deep neural networks built on a tape-based autograd system.

Originally developed at Idiap Research Institute, NYU, NEC Laboratories America, Facebook, and Deepmind Technologies, with input from the Torch and Caffe2 projects, PyTorch now has a thriving open source community. PyTorch 1.10, released in October 2021, has commits from 426 contributors, and the repository currently has 54,000 stars.

This article is an overview of PyTorch, including new features in PyTorch 1.10 and a brief guide to getting started with PyTorch. I’ve previously reviewed PyTorch 1.0.1 and compared TensorFlow and PyTorch. I suggest reading the review for an in-depth discussion of PyTorch’s architecture and how the library works.

The evolution of PyTorch

Early on, academics and researchers were drawn to PyTorch because it was easier to use than TensorFlow for model development with graphics processing units (GPUs). PyTorch defaults to eager execution mode, meaning that its API calls execute when invoked, rather than being added to a graph to be run later. TensorFlow has since improved its support for eager execution mode, but PyTorch is still popular in the academic and research communities.
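To make eager execution and the tape-based autograd system concrete, here is a minimal sketch. The tensor values are arbitrary and chosen only for illustration.

```python
import torch

# Eager mode: each call executes immediately and returns a concrete tensor.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = (x ** 2).sum()  # evaluated right away, no separate graph-building step
print(y.item())

# Autograd recorded the operations above as they ran;
# backward() walks that record in reverse to compute gradients.
y.backward()
print(x.grad)  # gradient of sum(x^2) with respect to x, which is 2 * x
```

Because nothing is deferred, you can inspect intermediate values with ordinary Python tools such as `print` or a debugger.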

At this point, PyTorch is production ready, allowing you to transition easily between eager and graph modes with TorchScript, and accelerate the path to production with TorchServe. The torch.distributed back end enables scalable distributed training and performance optimization in research and production, and a rich ecosystem of tools and libraries extends PyTorch and supports development in computer vision, natural language processing, and more. Finally, PyTorch is well supported on major cloud platforms, including Alibaba, Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. Cloud support provides frictionless development and easy scaling.
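As a rough illustration of moving from eager mode to graph mode with TorchScript, the sketch below scripts a small module. The `Gate` module is a hypothetical example, not something taken from the PyTorch release.

```python
import torch


class Gate(torch.nn.Module):
    """Hypothetical module with data-dependent control flow."""

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Scripting preserves this branch; it is not baked in for one input.
        if x.sum() > 0:
            return x * 2
        return -x


eager = Gate()
scripted = torch.jit.script(eager)  # compile the module to TorchScript

x = torch.tensor([1.0, -3.0])
print(eager(x), scripted(x))  # both modes should agree
print(scripted.code)          # the TorchScript source that was generated
```

A scripted module can be saved with `scripted.save(...)` and loaded without the original Python class, which is one reason it is useful on the way to serving a model.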

What’s new in PyTorch 1.10

According to the PyTorch blog, PyTorch 1.10 updates focused on improving training and performance as well as developer usability. See the PyTorch 1.10 release notes for details. Here are a few highlights of this release:

- CUDA Graphs APIs are integrated to reduce CPU overheads for CUDA workloads.

- Several front-end APIs such as FX, torch.special, and nn.Module parametrization were moved from beta to stable. FX is a Pythonic platform for transforming PyTorch programs; torch.special implements special functions such as gamma and Bessel functions.

- A new LLVM-based JIT compiler supports automatic fusion in CPUs as well as GPUs. The LLVM-based JIT compiler can fuse together sequences of torch library calls to improve performance.

- Android NNAPI support is now available in beta. NNAPI (Android’s Neural Networks API) allows Android apps to run computationally intensive neural networks on the most powerful and efficient parts of the chips that power mobile phones, including GPUs and specialized neural processing units (NPUs).
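The three front-end APIs named above can be tried on a CPU. The sketch below is illustrative only: the `add` function and the `Symmetric` parametrization are made-up examples, and the CUDA Graphs and NNAPI features are left out because they need specific hardware.

```python
import torch
import torch.fx
import torch.nn.utils.parametrize as parametrize

# torch.special: log-gamma and a modified Bessel function of the first kind.
x = torch.tensor([1.0, 5.0])
log_gamma = torch.special.gammaln(x)
bessel = torch.special.i0(torch.tensor([0.0]))


# FX: capture a function as a graph, then rewrite the graph.
def add(a, b):
    return torch.add(a, b)


traced = torch.fx.symbolic_trace(add)
for node in traced.graph.nodes:
    # Illustrative transform: swap every torch.add call for torch.mul.
    if node.op == "call_function" and node.target == torch.add:
        node.target = torch.mul
traced.recompile()
print(traced.code)


# nn.Module parametrization: constrain a weight to be symmetric.
class Symmetric(torch.nn.Module):
    def forward(self, w):
        return w.triu() + w.triu(1).transpose(-1, -2)


layer = torch.nn.Linear(3, 3)
parametrize.register_parametrization(layer, "weight", Symmetric())
print(layer.weight)  # recomputed through Symmetric on every access
```

After the FX rewrite, calling `traced(a, b)` multiplies its arguments instead of adding them, which shows that the transform changed the program rather than just its printed form.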

The PyTorch 1.10 release included over 3,400 commits, indicating a project that is active and focused on improving performance through a variety of methods.

How to get started with PyTorch

Reading the version update release notes won’t tell you much if you don’t understand the basics of the project or how to get started using it, so let’s fill that in.

The PyTorch tutorial page offers two tracks: one for those familiar with other deep learning frameworks and one for newbs. If you need the newb track, which introduces tensors, datasets, autograd, and other important concepts, I suggest that you follow it and use the Run in Microsoft Learn option, as shown in Figure 1.

Figure 1. The “newb” track for learning PyTorch. (Image: IDG)
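For a taste of the tensor and dataset concepts that track introduces, here is a small sketch. The data is synthetic and the batch size is arbitrary; this is not taken from the tutorial itself.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Six samples with two features each, plus one integer label per sample.
features = torch.arange(12, dtype=torch.float32).reshape(6, 2)
labels = torch.tensor([0, 1, 0, 1, 0, 1])

dataset = TensorDataset(features, labels)
loader = DataLoader(dataset, batch_size=4, shuffle=False)

# Use a GPU when one is available, otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

for batch_features, batch_labels in loader:
    batch_features = batch_features.to(device)
    print(batch_features.shape, batch_labels.tolist())
```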

If you’re already familiar with deep learning concepts, then I suggest running the quickstart notebook shown in Figure 2. You can also click on Run in Microsoft Learn or Run in Google Colab, or you can run the notebook locally.

Figure 2. The advanced (quickstart) track for learning PyTorch. (Image: IDG)

PyTorch projects to watch

As shown on the left side of the screenshot in Figure 2, PyTorch has lots of recipes and tutorials. It also has numerous models and examples of how to use them, usually as notebooks. Three projects in the PyTorch ecosystem strike me as particularly interesting: Captum, PyTorch Geometric (PyG), and skorch.

Captum

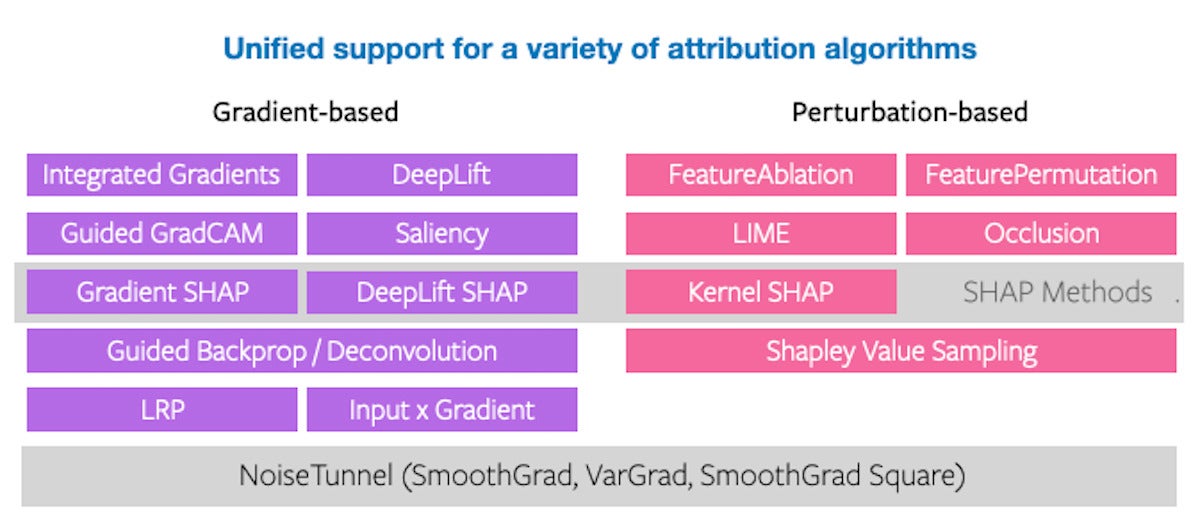

As noted on this project’s GitHub repository, the word captum means comprehension in Latin. As described on the repository page and elsewhere, Captum is “a model interpretability library for PyTorch.” It contains a variety of gradient and perturbation-based attribution algorithms that can be used to interpret and understand PyTorch models. It also has quick integration for models built with domain-specific libraries such as torchvision, torchtext, and others.

Figure 3 shows all of the attribution algorithms currently supported by Captum.

Figure 3. Captum attribution algorithms in a table format. (Image: IDG)

PyTorch Geometric (PyG)

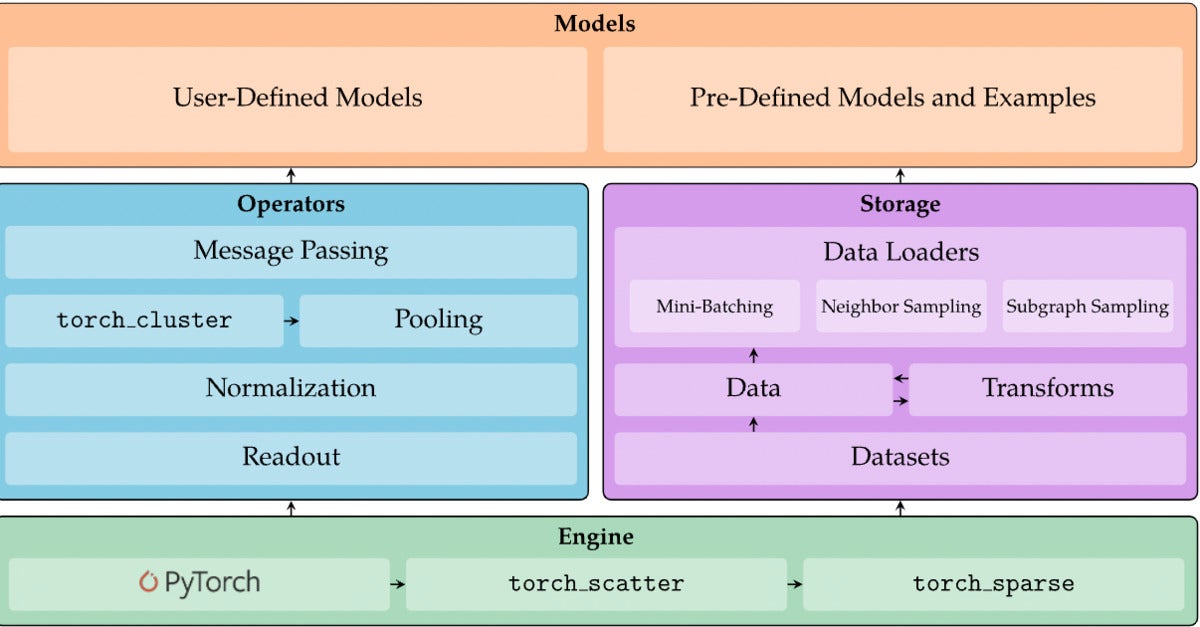

PyTorch Geometric (PyG) is a library that data scientists and others can use to write and train graph neural networks for applications related to structured data. As explained on its GitHub repository page:

“PyG offers methods for deep learning on graphs and other irregular structures, also known as geometric deep learning. In addition, it consists of easy-to-use mini-batch loaders for operating on many small and single giant graphs, multi GPU-support, distributed graph learning via Quiver, a large number of common benchmark datasets (based on simple interfaces to create your own), the GraphGym experiment manager, and helpful transforms, both for learning on arbitrary graphs as well as on 3D meshes or point clouds.”

Figure 4 is an overview of PyTorch Geometric’s architecture.

Figure 4. The architecture of PyTorch Geometric. (Image: IDG)

skorch

skorch is a scikit-learn compatible neural network library that wraps PyTorch. The goal of skorch is to make it possible to use PyTorch with sklearn. If you are familiar with sklearn and PyTorch, you don’t have to learn any new concepts, and the syntax should be well known. Additionally, skorch abstracts away the training loop, making a lot of boilerplate code obsolete. A simple net.fit(X, y) is enough, as shown in Figure 5.

Figure 5. Defining and training a neural net classifier with skorch. (Image: IDG)

Conclusion

Overall, PyTorch is one of a handful of top-tier frameworks for deep neural networks with GPU support. You can use it for model development and production, you can run it on-premises or in the cloud, and you can find many pre-built PyTorch models to use as a starting point for your own models.

Copyright © 2022 IDG Communications, Inc.
